AssemblyAI's October 2025 releases: Multilingual streaming, guardrails, and LLM Gateway

AssemblyAI's October 2025 releases include multilingual streaming in six languages, safety guardrails, LLM Gateway, and major improvements to Universal-2 and Slam models.


October brought major updates to AssemblyAI's Voice AI platform, from expanded language support to new safety features and significant model improvements. These updates help you ship accurate, safe, and multilingual voice applications, whether you're building voice agents, transcription tools, or conversation intelligence platforms.

Here's everything we shipped in October.

Universal-Streaming goes multilingual

Real-time transcription just got more accessible. AssemblyAI's streaming model, known for its speed and accuracy in English, now supports five additional languages: Spanish, French, German, Italian, and Portuguese.

You can now build voice applications that handle multilingual conversations in real time without sacrificing accuracy or low latency. To use it, simply set your speech model to universal_streaming_multilingual in your API request.
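As a rough illustration of where that setting might go, here is a minimal sketch that builds a connection URL with the model name as a query parameter. Only the value `universal_streaming_multilingual` comes from this post. The endpoint is a deliberate placeholder, and the parameter names `speech_model` and `sample_rate` are assumptions, so check the official streaming documentation before relying on any of them.

```python
from urllib.parse import urlencode

# Assumption: placeholder endpoint, not a real AssemblyAI URL.
# Replace it with the streaming endpoint from the official documentation.
STREAMING_ENDPOINT = "wss://streaming.example.invalid/ws"


def build_streaming_url(
    speech_model: str = "universal_streaming_multilingual",
    sample_rate: int = 16000,
) -> str:
    """Return a WebSocket URL with the speech model passed as a query parameter."""
    # Assumption: the parameter names below are illustrative.
    # Only the model value is taken from the announcement.
    params = {"speech_model": speech_model, "sample_rate": sample_rate}
    return f"{STREAMING_ENDPOINT}?{urlencode(params)}"


if __name__ == "__main__":
    print(build_streaming_url())
```

Keeping the model name in one function argument means switching between the English-only and multilingual models is a one-line change in the calling code.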

The addition of multilingual streaming is particularly valuable for customer support platforms, live captioning systems, and voice agents that serve global audiences. You no longer need to route different languages to different models or compromise on performance.

Speech Understanding

Complex speech tasks just got simpler. The new Speech Understanding feature consolidates multiple audio intelligence tasks, such as speaker identification, custom formatting, and translation, into a single API request.

Previously, building features like "transcribe this meeting, identify each speaker, and translate it to Spanish" meant managing multiple API calls or extensive prompt engineering. Speech Understanding handles all of that in one go. You define what you need, and AssemblyAI's models take care of the orchestration.

This streamlines development workflows and reduces the complexity of building sophisticated voice applications. Instead of managing multi-step processes and refining prompts endlessly, you can focus on your core product features.

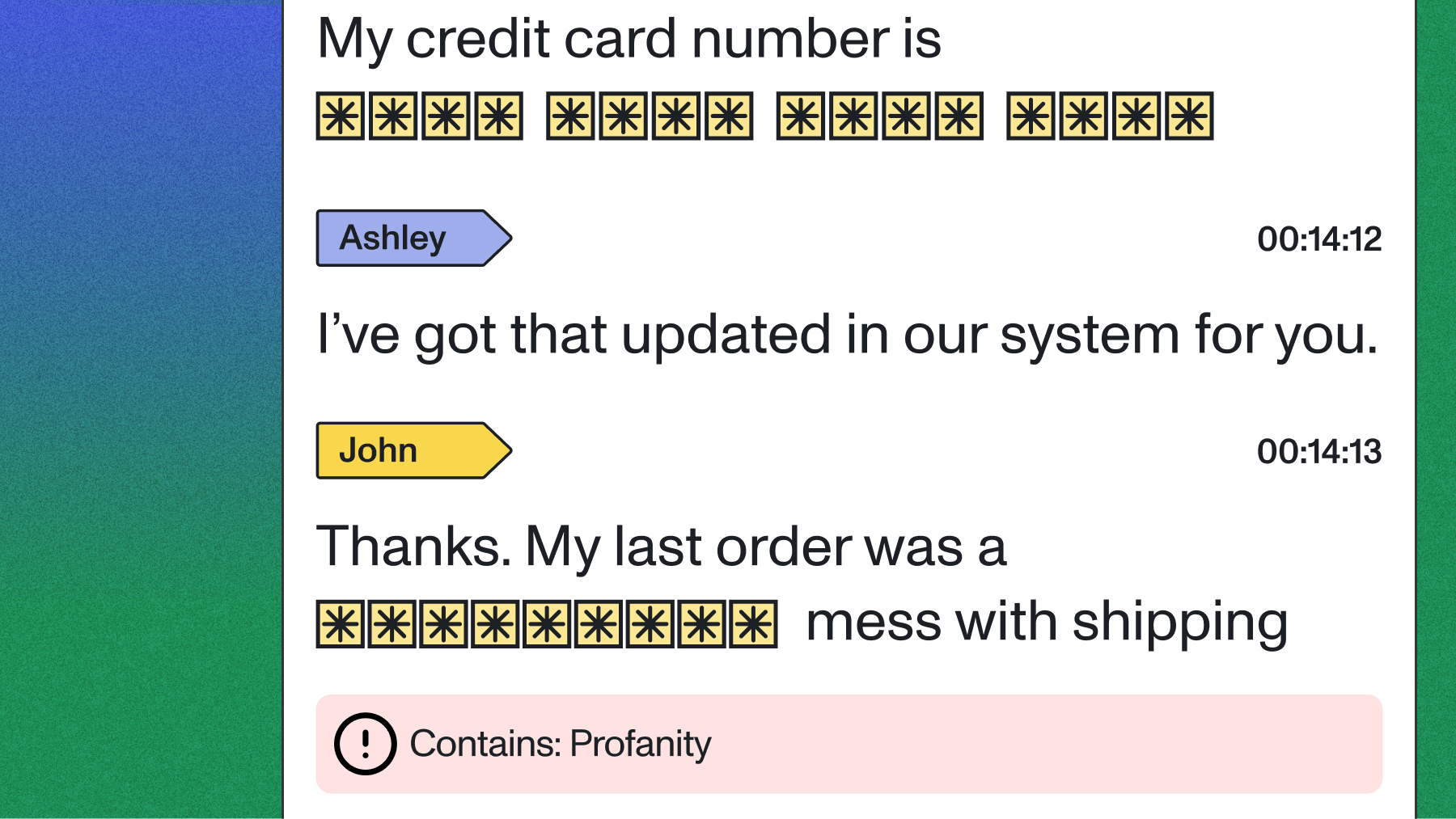

Safety guardrails for Voice AI

When shipping voice AI applications to end users, output safety isn't optional; it's essential. October's release introduced several guardrails to help you maintain safe, compliant voice experiences:

Profanity filtering automatically detects and filters inappropriate language from transcripts, giving you control over what reaches your users.

PII redaction identifies and removes personally identifiable information like phone numbers, email addresses, and social security numbers—critical for compliance with privacy regulations.

Content moderation flags potentially harmful content across categories, allowing you to review or filter outputs before they reach users.

Speech threshold helps you distinguish between actual speech and background noise, reducing false transcriptions and improving overall accuracy.

These features work together to keep your customers safe and your applications compliant, whether you're building healthcare documentation tools, customer service platforms, or educational applications.
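The four guardrails above could be switched on together in a single set of request options. The sketch below assembles such a dictionary. The field names (`filter_profanity`, `redact_pii`, `redact_pii_policies`, `content_safety`, `speech_threshold`), the policy identifiers, and the 0 to 1 threshold range are assumptions made for illustration and are not confirmed by this post, so verify them against the API reference.

```python
import json


def build_guardrail_options(
    redact_pii: bool = True,
    speech_threshold: float = 0.5,
) -> dict:
    """Collect the four guardrails into one request-options dictionary."""
    # Assumption: the threshold is a fraction between 0 and 1.
    if not 0.0 <= speech_threshold <= 1.0:
        raise ValueError("speech_threshold must be between 0 and 1")

    # Assumption: all field names here are illustrative.
    options = {
        "filter_profanity": True,    # profanity filtering
        "content_safety": True,      # content moderation
        "speech_threshold": speech_threshold,
    }
    if redact_pii:
        options["redact_pii"] = True
        # The PII types named in the post; identifiers are hypothetical.
        options["redact_pii_policies"] = [
            "phone_number",
            "email_address",
            "us_social_security_number",
        ]
    return options


if __name__ == "__main__":
    print(json.dumps(build_guardrail_options(), indent=2))
```

Building the options in one place makes it easier to apply the same safety settings consistently across every transcription request an application sends.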

LLM Gateway

Managing multiple LLM providers just got easier. LLM Gateway gives you access to the latest large language models from various providers through a single, unified interface.

The benefits are immediate: you can A/B test different models during development without managing separate accounts for each provider. Need to compare GPT-4 with Claude or Gemini? Just switch a parameter. This accelerates development and makes it simpler to find the right model for your specific use case.

LLM Gateway eliminates the operational overhead of maintaining integrations with multiple LLM providers while giving you the flexibility to use whichever model works best for your application.
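To make the "switch a parameter" idea concrete, here is a minimal sketch that prepares one request payload per candidate model for an A/B comparison. The chat-style payload shape and the model identifiers are hypothetical. The post does not show the gateway's request format, so treat this as an illustration of the pattern and not as the actual API.

```python
def build_chat_request(model: str, prompt: str) -> dict:
    """Build one request payload; only the `model` field varies between runs."""
    # Assumption: a chat-style payload with `model` and `messages` fields.
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }


def build_ab_requests(prompt: str, models: list[str]) -> dict[str, dict]:
    """Return a payload per model so the same prompt can be compared across them."""
    return {model: build_chat_request(model, prompt) for model in models}


if __name__ == "__main__":
    # Hypothetical model identifiers; use the names the gateway documents.
    candidates = ["provider-a-model", "provider-b-model"]
    for name, payload in build_ab_requests("Summarize this call.", candidates).items():
        print(name, payload)
```

Because every payload is identical apart from `model`, any difference in the responses can be attributed to the model and not to the prompt or request settings.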

Model improvements: Universal-2 and Slam

AssemblyAI's core models got significant upgrades in October:

Universal-2 now supports automatic code switching—seamlessly handling conversations where speakers switch between languages mid-sentence. It also expanded its vocabulary with support for 200 key terms, improving accuracy on domain-specific terminology.

Slam saw major improvements in accuracy and context handling. The model is now 57% more accurate on critical terms and supports 1,000-word context-aware prompting. This means Slam can better understand industry-specific jargon, technical terms, and proper nouns that are crucial for specialized applications.
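If you maintain a list of domain terms for Universal-2, a small client-side check can keep it within the 200-term limit mentioned above. The sketch below trims whitespace, removes case-insensitive duplicates, and rejects lists that are still too long. The helper name is hypothetical, and how the list is passed to the API is not covered in this post.

```python
def prepare_key_terms(terms: list[str], limit: int = 200) -> list[str]:
    """Clean a key-term list and enforce a maximum size.

    The default limit of 200 is the Universal-2 figure quoted in this post.
    """
    seen = set()
    cleaned = []
    for term in terms:
        normalized = term.strip()
        key = normalized.lower()
        # Skip empty entries and case-insensitive duplicates,
        # keeping the first spelling encountered.
        if normalized and key not in seen:
            seen.add(key)
            cleaned.append(normalized)
    if len(cleaned) > limit:
        raise ValueError(f"{len(cleaned)} key terms exceed the limit of {limit}")
    return cleaned


if __name__ == "__main__":
    print(prepare_key_terms([" HIPAA", "hipaa", "", "tachycardia"]))
```

Raising an error instead of silently truncating keeps the choice of which terms to drop with the person who knows the domain.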

Improved speaker labels

Speaker diarization, the ability to identify "who spoke when" in audio, received a substantial accuracy boost. The updated speaker labels model delivers 64% fewer speaker counting errors.

This improvement is particularly impactful for applications like meeting transcription, podcast analysis, and conversation intelligence platforms where accurately attributing speech to the correct speaker is fundamental to the user experience.

What this means for developers

These updates make Voice AI more accessible, accurate, and safe. From real-time streaming in six languages to content moderation and enhanced speaker identification, October's releases help you ship better voice products with built-in safety features and improved accuracy across specialized use cases.
