Speech Understanding tasks explained: Speaker ID, custom formatting, and translation

Learn how Speech Understanding tasks—speaker identification, custom formatting, and translation—transform raw transcripts into structured, actionable data without manual post-processing.

The real cost of transcription isn't the API call, but the 20-30% of time your team spends manually processing the output.

You get back a transcript, but it's labeled "Speaker A" and "Speaker B." Phone numbers are spelled out. Dates appear in inconsistent formats. If you're serving global customers, you need translations on top of transcriptions. Each of these gaps requires manual post-processing or custom code before the transcript becomes usable data.

Speech Understanding tasks solve this by transforming raw transcripts into structured, actionable intelligence automatically. Rather than treating transcription as an isolated step that produces generic text, Speech Understanding applies specialized processing during transcription to deliver outputs that match your specific business needs. This article covers three critical tasks: advanced speaker identification, custom formatting, and translation.

The gap between transcription and usable data

Traditional speech-to-text gives you words on a page, but most applications need more than that.

Consider a healthcare platform processing patient consultations. A basic transcript might show "Speaker A said this, Speaker B said that." But the system needs to know which speaker is the dietitian and which is the patient, not just for human review, but for automated chart updates and compliance tracking. Manual speaker identification can consume 20-30% of a clinician's documentation time.

Or take a call center handling thousands of daily interactions. Raw transcripts need to be ingested into CRM systems that expect specific data formats. When email addresses transcribe as "john at company dot com" instead of "john@company.com," automated workflows break. Teams spend hours reformatting transcripts before they can be processed.

Global companies face an additional challenge: serving customers across multiple languages without building separate transcription pipelines for each market. The traditional approach—transcribe in the source language, then translate—adds latency, cost, and complexity.

Speech Understanding tasks address these gaps by building intelligence directly into the transcription process.

Advanced speaker identification

Basic speaker diarization tells you when speakers change. Advanced speaker identification tells you who's speaking.

The distinction matters for three reasons: compliance, automation, and analytics.

Compliance requirements in industries like healthcare and finance mandate knowing exactly who said what. A call center needs to verify that the authorized account holder, not just "Speaker A," provided consent. Legal intake systems need to attribute statements to specific parties for case documentation.

Automation breaks when systems can't distinguish speakers by role. Healthcare platforms that auto-update patient charts need to know which statements came from the clinician versus the patient. Contact centers building agent performance scorecards need accurate agent identification, not generic speaker labels.

Analytics lose value when aggregated across anonymous speakers. Without knowing who each speaker represents, you can't identify coaching opportunities, track sentiment by role, or analyze conversation dynamics.

Advanced speaker identification solves this through two approaches: role-based labeling and name-based labeling.

Role-based labeling automatically tags speakers by their function: agent versus customer, doctor versus patient, interviewer versus candidate. This works by analyzing acoustic patterns, conversation flow, and contextual signals to determine each speaker's role without requiring training data for specific individuals.

Name-based labeling goes further by identifying speakers by name when you provide a list of expected participants. This is valuable for internal meetings, recorded consultations with pre-scheduled participants, or any scenario where you know who should be speaking.

The alternative, using text-based language models to guess speaker roles after transcription, typically caps out at 75-80% accuracy. Audio-based speaker identification during transcription achieves higher accuracy by leveraging acoustic features that disappear once speech becomes text.
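To make the difference between generic labels and role or name labels concrete, here is a minimal Python sketch of the relabeling step. The `Utterance` type, the `relabel_speakers` helper, and the sample conversation are hypothetical illustrations, not part of any API. The sketch also assumes the label-to-role mapping is already known, which is exactly the part that audio-based identification is meant to supply.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Utterance:
    speaker: str  # generic diarization label such as "A" or "B"
    text: str


def relabel_speakers(utterances, label_map):
    """Return new utterances with generic labels swapped for roles or names.

    Labels that are missing from label_map are left unchanged.
    """
    return [
        replace(u, speaker=label_map.get(u.speaker, u.speaker))
        for u in utterances
    ]


# Hypothetical diarized output from a patient consultation.
utterances = [
    Utterance("A", "How have you been feeling since the last visit?"),
    Utterance("B", "Better, but I still get headaches."),
]

# Role-based labeling: map each generic label to a function.
by_role = relabel_speakers(utterances, {"A": "Clinician", "B": "Patient"})

for u in by_role:
    print(f"{u.speaker}: {u.text}")
```

Name-based labeling would use the same shape with a mapping such as `{"A": "Dr. Lee"}`; any label without an entry simply keeps its generic value.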

Custom formatting

The difference between a transcript and usable data is formatting.

Contact centers processing tens of thousands of hours monthly need transcripts that flow directly into CRM systems. When phone numbers, email addresses, and dates don't match expected formats, automated workflows fail. What should be a 30-second transcription becomes a 5-minute data entry task.

Custom formatting eliminates this friction by applying your formatting rules during transcription. You define the rules once; every transcript follows them consistently.

Date standardization handles regional variations automatically. A US-based company might need "10/22/2025" while European operations expect "22/10/2025." Global organizations can specify ISO format "2025-10-22" for database compatibility. Without custom formatting, teams must manually convert dates or build parsing logic to handle inconsistent outputs.
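As a rough illustration of what a date rule does, the Python sketch below renders one date in the three formats mentioned above. The `DATE_FORMATS` table and the `format_date` helper are hypothetical names chosen for this example; they show the kind of conversion logic you would otherwise maintain yourself, not how the service implements it.

```python
from datetime import date

# Hypothetical rule names mapped to standard strftime patterns.
DATE_FORMATS = {
    "us": "%m/%d/%Y",
    "eu": "%d/%m/%Y",
    "iso": "%Y-%m-%d",
}


def format_date(value: date, style: str) -> str:
    """Render a date according to one of the named styles."""
    return value.strftime(DATE_FORMATS[style])


spoken_date = date(2025, 10, 22)
print(format_date(spoken_date, "us"))   # 10/22/2025
print(format_date(spoken_date, "eu"))   # 22/10/2025
print(format_date(spoken_date, "iso"))  # 2025-10-22
```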

Phone number patterns ensure transcripts match your CRM's expected format. Whether you need "(555) 123-4567," "555-123-4567," or international formats, custom formatting applies the pattern automatically. This prevents integration failures when systems expect specific phone number structures.
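The sketch below shows, in Python, roughly what applying a phone pattern involves. It assumes 10-digit US numbers only, and the `PHONE_PATTERNS` table and `format_us_phone` helper are hypothetical names for illustration; real international handling is considerably more involved.

```python
import re

# Hypothetical pattern names; {0}, {1}, {2} are area code, prefix, line number.
PHONE_PATTERNS = {
    "parens": "({0}) {1}-{2}",
    "dashes": "{0}-{1}-{2}",
    "e164_us": "+1{0}{1}{2}",
}


def format_us_phone(raw: str, style: str) -> str:
    """Normalize a US phone number to one of the named patterns."""
    digits = re.sub(r"\D", "", raw)  # keep digits only
    if len(digits) == 11 and digits.startswith("1"):
        digits = digits[1:]  # drop a leading country code
    if len(digits) != 10:
        raise ValueError(f"expected 10 digits, got {len(digits)}")
    return PHONE_PATTERNS[style].format(digits[:3], digits[3:6], digits[6:])


print(format_us_phone("555 123 4567", "parens"))    # (555) 123-4567
print(format_us_phone("5551234567", "dashes"))      # 555-123-4567
print(format_us_phone("1-555-123-4567", "e164_us")) # +15551234567
```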

Contact information converts spelled-out emails and URLs into properly formatted, clickable text. "john at company dot com" becomes "john@company.com." "company dot com slash products" becomes "company.com/products." This seemingly small improvement eliminates a major friction point in automated processing.
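Here is a minimal Python sketch of that conversion, using the two examples above. The `normalize_spoken_address` helper is hypothetical, and it assumes the phrase has already been isolated as an email address or URL. Run over a whole sentence, it would also rewrite ordinary uses of "at" and "dot", which is one reason text-only cleanup is harder than it looks.

```python
import re

# Spoken words and the symbols they stand for, applied in order.
SPOKEN_SYMBOLS = [
    (r"\s+at\s+", "@"),
    (r"\s+dot\s+", "."),
    (r"\s+slash\s+", "/"),
]


def normalize_spoken_address(phrase: str) -> str:
    """Convert a spelled-out email or URL phrase into its written form."""
    result = phrase.strip()
    for pattern, symbol in SPOKEN_SYMBOLS:
        result = re.sub(pattern, symbol, result, flags=re.IGNORECASE)
    return result


print(normalize_spoken_address("john at company dot com"))
# john@company.com
print(normalize_spoken_address("company dot com slash products"))
# company.com/products
```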

Currency and number precision gives you control over decimal places and formatting for financial transcripts. Loan applications, insurance claims, and financial consultations need consistent number formatting for downstream processing.
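To show what control over decimal places means in practice, the Python sketch below rounds an amount to a fixed precision and adds a currency code. The `format_amount` helper, its defaults, and the choice of half-up rounding are assumptions made for this example. It uses `Decimal` rather than `float` so that rounding behaves predictably for financial values.

```python
from decimal import Decimal, ROUND_HALF_UP


def format_amount(value: str, places: int = 2, currency: str = "USD") -> str:
    """Round an amount to a fixed number of decimal places and label it."""
    quantum = Decimal(1).scaleb(-places)  # Decimal("0.01") when places == 2
    amount = Decimal(value).quantize(quantum, rounding=ROUND_HALF_UP)
    return f"{currency} {amount:,.{places}f}"


print(format_amount("1234567.505"))                 # USD 1,234,567.51
print(format_amount("19.999", currency="EUR"))      # EUR 20.00
print(format_amount("2.5", places=0))               # USD 3
```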

Multi-rule application lets you apply multiple formatting standards simultaneously. A single transcript can follow US date formatting, international phone patterns, and ISO currency codes, whatever combination matches your systems.
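One way to picture multi-rule application is as a single rule set, defined once and applied to every value found in a transcript. The Python sketch below is a hypothetical model of that idea: the `apply_rules` helper, the entity kinds, and the sample values are all invented for illustration and do not reflect the actual configuration format.

```python
from datetime import date


def apply_rules(entities, rules):
    """Format each entity with the rule for its kind, if one is defined."""
    formatted = {}
    for kind, value in entities.items():
        rule = rules.get(kind)
        formatted[kind] = rule(value) if rule else str(value)
    return formatted


# Defined once: US dates, international phone pattern, ISO currency code.
rules = {
    "date": lambda d: d.strftime("%m/%d/%Y"),
    "phone": lambda digits: f"+1{digits}",
    "currency": lambda amount: f"USD {amount:.2f}",
}

# Hypothetical values extracted from one transcript.
entities = {
    "date": date(2025, 10, 22),
    "phone": "5551234567",
    "currency": 1200.5,
    "note": "follow up next week",
}

print(apply_rules(entities, rules))
```

Values with no matching rule, such as `note` here, pass through as plain strings, so adding a new rule never changes how unrelated fields are handled.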

The real pain point here isn't the formatting itself, but the inconsistency. When transcripts arrive in unpredictable formats, you're forced to either build complex parsing logic or accept manual cleanup. Custom formatting makes every transcript predictable.

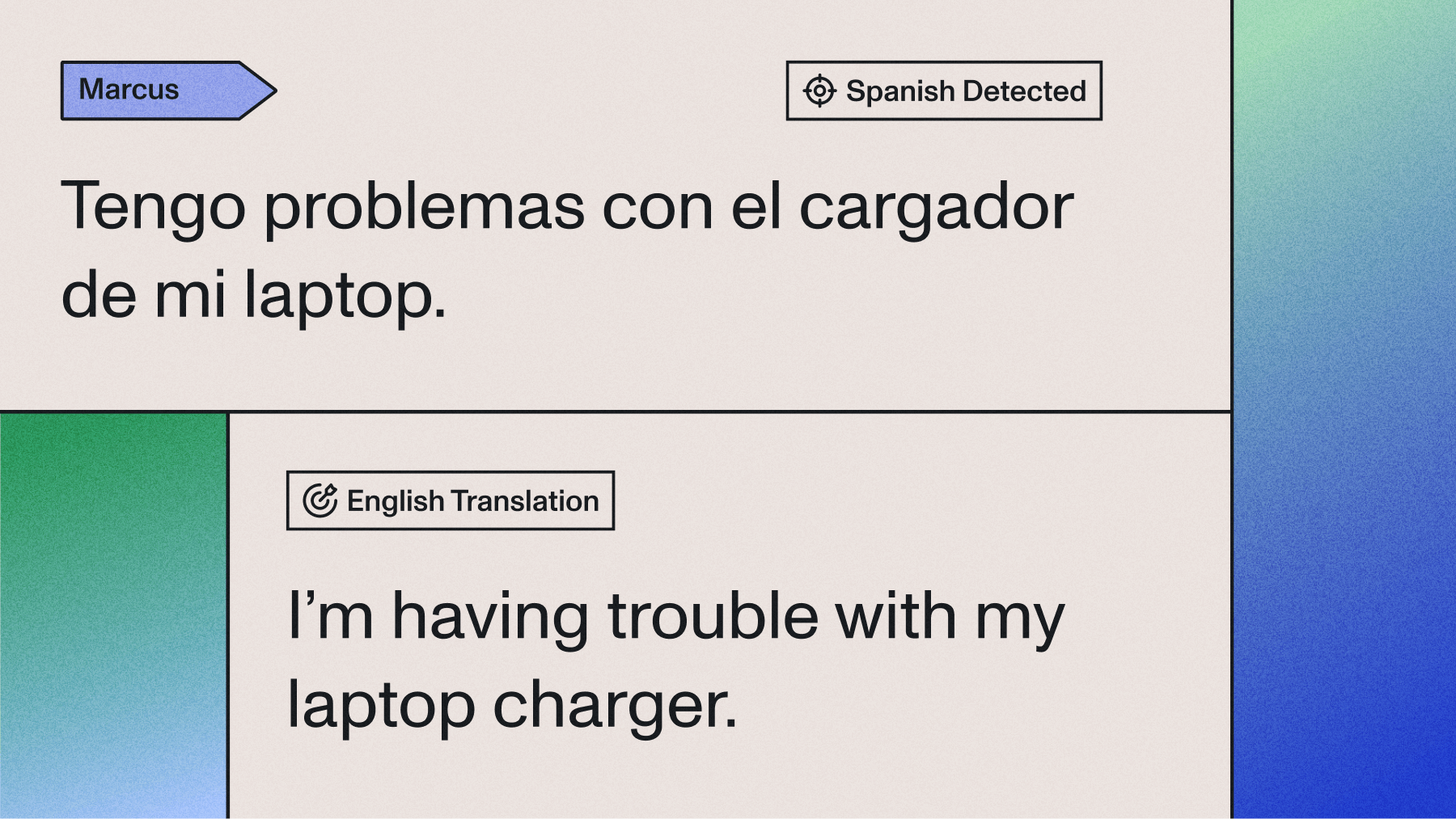

Translation

Serving global customers shouldn't require separate transcription pipelines for each language.

The traditional approach, transcribing audio in the source language, then sending the transcript to a translation API, works but introduces unnecessary complexity. You're managing two separate API calls, handling potential failures at each step, and accepting additional latency from sequential processing.
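The two-step pattern can be sketched in Python as follows. Everything here is hypothetical: `transcribe_then_translate`, `PipelineError`, and the stand-in callables are placeholders for whatever transcription and translation services you would call, and they exist only to show that each stage needs its own error handling and that the second call has to wait for the first.

```python
from typing import Callable


class PipelineError(Exception):
    """Records which stage of the two-step pipeline failed."""

    def __init__(self, stage: str, cause: Exception):
        super().__init__(f"{stage} failed: {cause}")
        self.stage = stage


def transcribe_then_translate(
    audio_ref: str,
    transcribe: Callable[[str], str],
    translate: Callable[[str, str], str],
    target_language: str,
) -> str:
    # Step 1: first call, first failure point.
    try:
        source_text = transcribe(audio_ref)
    except Exception as exc:
        raise PipelineError("transcription", exc) from exc

    # Step 2: second call, which cannot start until step 1 has finished.
    try:
        return translate(source_text, target_language)
    except Exception as exc:
        raise PipelineError("translation", exc) from exc


# Stand-in callables so the sketch runs without any external service.
def fake_transcribe(audio_ref: str) -> str:
    return "hola, ¿cómo estás?"


def fake_translate(text: str, target_language: str) -> str:
    raise TimeoutError("translation service timed out")


try:
    transcribe_then_translate("call-001.wav", fake_transcribe, fake_translate, "en")
except PipelineError as err:
    failed_stage = err.stage
    print(f"Pipeline stopped at: {failed_stage}")
```

In this run the transcription succeeds and the translation fails, so the caller has to decide whether to retry only the second step or start over, a decision that does not arise when both happen in one request.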

Organizations expanding into new markets face this complexity at scale. Healthcare platforms operating across multiple countries need consultation transcripts in local languages. Media monitoring companies analyzing African market advertisements need both transcription and translation for millions of audio files. International recruiting platforms need interview transcripts in various languages, without building country-specific infrastructure.

Speech Understanding handles this through integrated translation that processes audio directly into your target language. The system detects the source language automatically and produces a transcript in your specified output language—all in a single API call.

89 languages are supported, covering the major global markets and many regional languages. This includes European languages, Asian languages with different character systems, and languages with limited commercial speech recognition support.

Automatic language detection removes the need to pre-specify the source language for each audio file. This is valuable when processing mixed-language datasets or handling customer interactions where the spoken language isn't known in advance.

Single API integration simplifies your architecture by eliminating the transcription-to-translation pipeline. Fewer API calls mean fewer potential failure points, reduced latency, and simpler error handling.

The business impact is straightforward: you can expand into new markets without rebuilding your speech processing infrastructure. A single integration supports global operations.

Why this matters now

Teams are shifting from "more data" to "smarter outputs."

The explosion of voice data created an initial focus on transcription volume. Can you process thousands of hours per month? Can you handle real-time transcription? These were the critical questions, but the bottleneck has since moved.

Companies can transcribe massive volumes, but they're drowning in generic text that requires extensive post-processing before it becomes useful. The challenge isn't capturing voice data anymore. Now it's turning that data into structured intelligence that drives business processes.

This shift is visible across industries:

Voice AI companies building conversational agents need sub-400ms latency with accurate, structured outputs—raw transcripts aren't enough when you're making real-time decisions.

Healthcare platforms require immediate, structured data for clinical decision support. A transcript labeled "Speaker A" doesn't help when you need to know whether the symptom description came from the patient or the clinician.

Enterprise teams can't afford the engineering bandwidth for custom post-processing pipelines. When you're processing tens of thousands of hours monthly, even small inefficiencies compound into significant resource drains.

Without Speech Understanding, teams face three options—none ideal:

- Build complex post-processing pipelines that consume engineering time and introduce maintenance overhead

- Accept reduced accuracy from text-based corrections that can't access the acoustic information in the original audio

- Process outputs manually, losing 20-30% of team time to tasks that should be automated

Speech Understanding eliminates this trade-off by embedding intelligence directly into the transcription process.

Getting started with Speech Understanding

The best transcription API gives you exactly what you need without the post-processing tax.

If your team spends time reformatting transcripts, manually identifying speakers, or managing multiple language pipelines, Speech Understanding tasks directly address those pain points. You're not adding complexity to your stack. Instead, you're removing the custom code and manual processes you've built to work around basic transcription limitations.

AssemblyAI's Speech-to-Text API includes Speech Understanding tasks like speaker identification, custom formatting, and translation alongside core transcription. Test these capabilities with your actual audio data. Configuration takes minutes, and you can start with 100,000 free credits.

The difference between raw transcription and actionable intelligence is often just a few API parameters. Teams processing thousands of hours monthly can eliminate post-processing entirely. You can configure your formatting rules, speaker identification, and target languages once, and every transcript arrives ready to use. That's the shift from more transcription to smarter outputs.
